Oracle vs. AWS Block Volumes

In 2022, Oracle Cloud Infrastructure (OCI) was recognised as a Visionary in the Gartner Magic Quadrant. Over the past few years, I have used OCI for various projects, such as Teach Stream, the educational remote learning platform, and have been thoroughly impressed by the service and customer support.

However, most of my contract work comes from AWS deployments. After a recent project working with some beefy RDS instances, I wondered how a similar block storage configuration would behave in OCI, so let's compare.

In this article, let's focus on a reasonably performant disk and the associated cost. A 2TB volume provisioned to 50,000 IOPS sounds reasonable for an average workload. We also require consistent baseline performance (no unpredictable bursting behaviours).

What is Cloud Block Storage?

Cloud Block Storage is a service provided by Cloud operators which exposes virtual block volumes to bare metal or virtual instances running on their platform. This article explicitly discusses the performance of network-attached block storage instead of locally attached NVMe storage (typically ephemeral in cloud environments due to its direct-attach nature).

Block Storage services come with several advantages, including:

- Adjustable throughput and capacity - the storage service provides adjustable throughput, IOPS and latency by provisioning your volume on specific hardware.

- Improved reliability - your volume is replicated across multiple underlying nodes, reducing the chance of failure compared to running on raw disks.

- Persistence and Flexibility - As the volume is not tightly coupled with the instance, the instance can be stopped and deleted without deleting the volume. The volume can be reattached to another instance or, with some providers, shared across multiple instances for a NAS-like clustered filesystem.

However, the biggest drawback of Cloud Block Storage solutions is the inescapable performance penalty of network-attached storage. The instance must communicate over a network to access the block volume, increasing round-trip latency per IO operation. Additionally, pushing considerable throughput to a volume requires a high-capacity network; for example, pushing 4GB/s (achievable by modern PCIe 4.0 NVMe SSDs) requires 32Gbps of network throughput - that's no small feat when thousands of customers are lining up.

In addition to this, instance types typically have various block storage limits; after all, instances are CPU and network-constrained resources. As a result, when provisioning large, high-performance volumes, it's crucial an appropriately sized instance is chosen, which can come at an additional expense. Otherwise, you are throwing money down the drain.

Other features of the block volume services also come into play when comparing services and choosing a cloud provider, such as snapshotting (OCI, AWS) and cloning (OCI). These additional features are beyond the scope of this article but might be covered in the future - subscribe for updates!

OCI Block Volumes

OCI Block Volumes provide NVMe SSD-backed storage with a simple Volume Performance Unit (VPU) per GB configuration for throughput and IOPS. The product page boasts up to 32TB volumes, 300,000 IOPS, 2,680 MB/sec of throughput per volume, a performance SLA and 99.99% annual durability.

OCI Block Volumes offer the only storage performance SLA among large cloud providers.

The Block Volume service officially supports para-virtualised and iSCSI attachments, with newer OS images supporting iSCSI as the preferred option for maximum IOPS performance.

Block volumes can be mounted as read-write, read-write (shareable - used with a cluster-aware filesystem), or read-only. All volumes are encrypted by default with AES-256. Cross-availability domain and cross-region asynchronous replication are available to support DR and other scenarios.

The VPU setting comes in several selectable performance levels:

- Ultra High Performance (Max: 2,680MB/s | 300,000 IOPS)

- Higher Performance (Max: 680MB/s | 50,000 IOPS)

- Balanced (Max: 480MB/s | 25,000 IOPS)

- Lower Cost (Max: 480MB/s | 3,000 IOPS)

The Volume Performance Units documentation has a great table of the IOPS and throughput to expect from volumes based on these options, along with the limits for different types of instances.

Storage is charged at $0.0255 per GB-month, and VPUs are charged per GB as well, so the performance cost also scales with the volume size.

To meet our scenario, a Higher Performance volume would suit. Using the OCI cost calculator, we can see a 2TB 20 VPU volume would provide 50,000 IOPS and 680 MB/s throughput.
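The pricing model above can be sketched in a few lines. This is a rough estimate rather than Oracle's calculator: the storage rate is the one quoted in this article, while `VPU_RATE` (USD per VPU per GB-month) is an assumption, chosen because it reproduces the volume costs listed in this article. The function name is hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP

# STORAGE_RATE is quoted in the article (USD per GB-month).
# VPU_RATE is an ASSUMPTION (USD per VPU per GB-month) that happens to
# reproduce the volume costs listed in this article.
STORAGE_RATE = Decimal("0.0255")
VPU_RATE = Decimal("0.0017")


def oci_volume_cost(size_gb: int, vpus_per_gb: int) -> Decimal:
    """Estimate the monthly cost of a volume in USD, rounded to cents."""
    storage = size_gb * STORAGE_RATE
    performance = size_gb * vpus_per_gb * VPU_RATE
    total = storage + performance
    return total.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


print(oci_volume_cost(2048, 20))   # 20 VPU volume  -> 121.86
print(oci_volume_cost(2048, 120))  # 120 VPU volume -> 470.02
```

Because both terms are multiplied by the volume size, doubling the capacity doubles the bill even if the VPU setting is left unchanged.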

To support 50,000 IOPS, OCI recommends an instance type with >= 8 OCPUs. On the E4 Flex instance type, 1 Gbps network capacity is provisioned per OCPU, giving up to 8 Gbps throughput. 680MBps = 5.44Gbps, so this should be sufficient.

It's worth highlighting here that with Oracle iSCSI Block Volumes, your storage traffic appears to be shared with network traffic, unlike EBS Optimised AWS instance types. As a result, your disk might be contending with user traffic if your instance is not adequately sized.
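The sizing check above can be written down as a quick sanity test. It is a minimal sketch that assumes 1 Gbps of network capacity per OCPU (the E4 Flex figure quoted above) and decimal units throughout; the function names are hypothetical.

```python
def mb_per_s_to_gbps(mb_per_s: float) -> float:
    """Convert decimal megabytes per second to gigabits per second."""
    return mb_per_s * 8 / 1000


def network_headroom_gbps(volume_mb_per_s: float, ocpus: int,
                          gbps_per_ocpu: float = 1.0) -> float:
    """Network capacity left over when the volume runs at full throughput.

    gbps_per_ocpu defaults to the E4 Flex figure quoted in the article;
    other shapes may differ, so treat it as an assumption.
    """
    return ocpus * gbps_per_ocpu - mb_per_s_to_gbps(volume_mb_per_s)


required = mb_per_s_to_gbps(680)
headroom = network_headroom_gbps(680, ocpus=8)
print(f"storage needs {required:.2f} Gbps, leaving {headroom:.2f} Gbps")
```

Since iSCSI traffic shares the instance's network, whatever headroom remains is all that is left for user traffic while the disk is saturated.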

2048GB - 20 VPU - High performance

50,000 IOPS | 680 MBps

Volume Cost: $121.86/month

VM.Standard.E4.Flex

8 OCPU (16 vCPU)

8 GB RAM

8 Gbps Network

Instance Cost: $157.73/month

Total Cost: $279.59/month

For the test case, we will use Canonical Ubuntu 22.04, encrypted-in-transit, iSCSI Attachment with CHAP credentials, and the Oracle Cloud Agent to automatically connect to iSCSI-attached volumes.

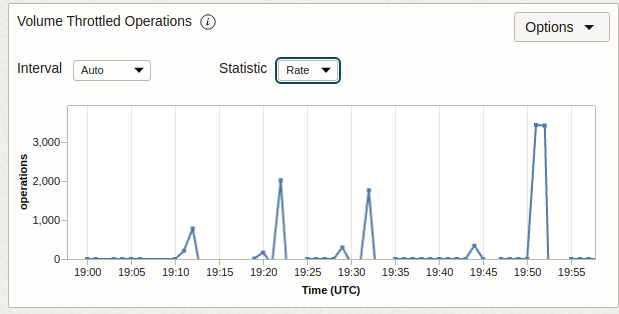

As an additional perk, OCI offers graphs highlighting any IO or network throttling on your instance and block volume so you can easily identify bottlenecks. We expect this to happen during our benchmarking, proving we are maxing out the provisioned capacity of the disk.

The fio commands we are running use a block size of 256k for throughput tests and 4k for IOPS and latency tests. The latency tests deliberately do not stress the disk to the point of throttling, because throttling delays subsequent requests and so inflates the measured latency. Specific commands can be found at the end of the article.
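The pattern behind those commands can be sketched as a small builder. It only uses fio flags that appear in the commands at the end of the article; the `PROFILES` table, the function name and the simplified job names are hypothetical, and `/dev/device` is the same placeholder the article uses. Note that the read/write variants write to the raw device, so they should only be pointed at a volume holding no data you care about.

```python
import shlex
from typing import List

# Block size, queue depth and job count per test type, taken from the
# commands listed at the end of the article.
PROFILES = {
    "throughput": {"bs": "256k", "iodepth": 64, "numjobs": 4},
    "iops": {"bs": "4k", "iodepth": 256, "numjobs": 4},
    "latency": {"bs": "4k", "iodepth": 1, "numjobs": 1},
}


def fio_command(test: str, rw: str, device: str = "/dev/device") -> List[str]:
    """Build the fio argument list for one test.

    test is a key of PROFILES; rw is "randread", "randrw" or "read".
    """
    profile = PROFILES[test]
    args = [
        "fio", f"--filename={device}", "--direct=1", f"--rw={rw}",
        f"--bs={profile['bs']}", "--ioengine=libaio",
        f"--iodepth={profile['iodepth']}", "--runtime=120",
        f"--numjobs={profile['numjobs']}", "--time_based",
        "--group_reporting", f"--name={test}-test-job", "--eta-newline=1",
    ]
    if rw in ("read", "randread"):
        # Read-only workloads also get fio's write-protection flag.
        args.append("--readonly")
    return args


print(shlex.join(fio_command("latency", "randread")))
```

A queue depth of 1 with a single job is what keeps the latency profile below the throttling point, while the throughput and IOPS profiles queue enough work to hit the provisioned limits.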

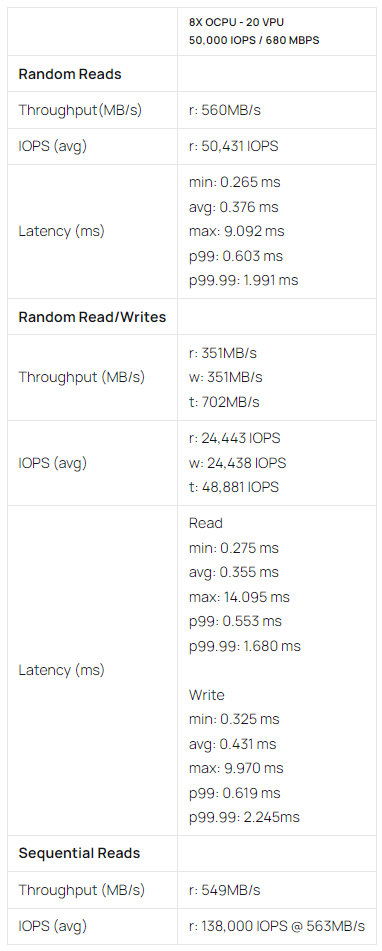

The results are below, with r (read), w (write) and t (total) where appropriate.

Overall, the volume we provisioned worked well. The expected IOPS were reached without issue and remained consistent throughout the tests. The lowest throughput we received during testing was 549MB/s, 80% of the provisioned throughput, whilst we exceeded the provisioned throughput on the read/write test.
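As a quick check of those percentages, the measured figures from the 20 VPU results table at the end of the article can be compared against the provisioned 680 MB/s. This is only an arithmetic sketch; the dictionary and function names are hypothetical.

```python
PROVISIONED_MB_S = 680

# Measured throughput (MB/s) copied from the 20 VPU results table.
measured = {
    "random read": 560,
    "random read/write": 702,
    "sequential read": 549,
}


def percent_of_provisioned(value: float,
                           provisioned: float = PROVISIONED_MB_S) -> float:
    """Return measured throughput as a percentage of the provisioned value."""
    return round(100 * value / provisioned, 1)


for name, value in measured.items():
    print(f"{name}: {percent_of_provisioned(value)}% of provisioned")
```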

Looking at the worst p99 latency measured, users with this configuration can expect 99% of IO requests to be completed within roughly 0.6ms. That is a respectable latency for a setup costing just $279/month.

Can we have more?

Let's try the testing again with the following configuration, with a larger instance type capable of doing some considerable number crunching.

2048GB Ultra High Performance Block Volume (120VPU)

300,000 IOPS

2,680 MB/s (21.4 Gbps)

Volume Cost: $470.02/month

E4.Flex

24 OCPU equals 48 vCPU

96GB Memory

24Gbps Network (SR-IOV Networking)

Instance Cost: $553.54/month

Total Cost: $1,027.80 / month

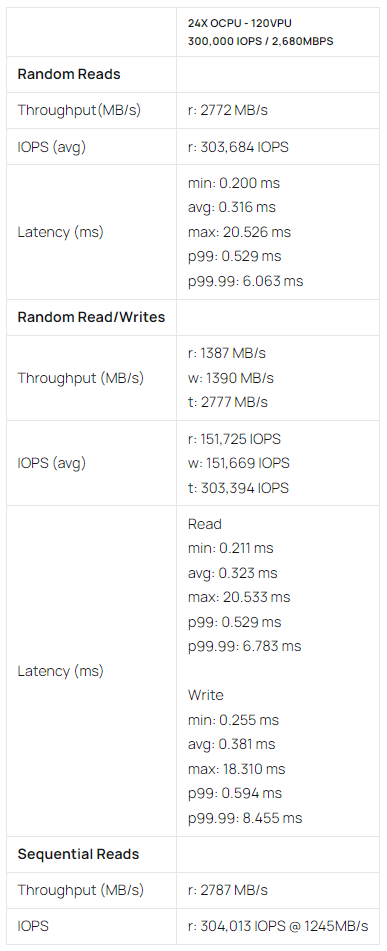

Ultra High-Performance volumes connect via iSCSI with multipathing, requiring us to use the multipath mapper device for the highest performance across multiple distinct network paths to the volume.

This configuration boasts impressive performance, especially considering the price point of $1,027.80/month. In all the tests, throughput and IOPS exceeded the provisioned values. p99 latency was acceptable, while the worst p99.99 and maximum latencies were recorded at 8.5ms and 20.5ms respectively.

Both the test cases here show OCI Block Volumes can deliver at a very palatable price point.

Amazon Elastic Block Store

Amazon Elastic Block Store (EBS) was released in 2008 to support the growing EC2 platform and has been one of the services underpinning AWS's stronghold in the industry. Whilst the details are under wraps, modern EBS deployments use a dedicated storage network, as documented by the fact that each Nitro instance type has dedicated, guaranteed EBS throughput, separate from network connectivity.

"On instances without support for EBS-optimized throughput, network traffic can contend with traffic between your instance and your EBS volumes; on EBS-optimized instances, the two types of traffic are kept separate." - EBS Performance Documentation

AWS documentation expresses its storage units in MiB (mebibyte = 2^20 = 1,048,576 bytes), whereas Oracle uses MB (megabyte = 1,000,000 bytes). The units used throughout this article reflect the correct usage and have been converted where appropriate for easy comparison between the test results.
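The conversions involved are small but easy to get wrong, so here is a minimal sketch of the two used for the AWS figures later in this article (800 MiB/s of volume throughput and 14.25 Gbps of EBS bandwidth). The function names are hypothetical.

```python
MIB = 2**20  # bytes in a mebibyte
MB = 10**6   # bytes in a megabyte


def mib_to_mb(mib: float) -> float:
    """Convert mebibytes (binary) to megabytes (decimal)."""
    return mib * MIB / MB


def gbps_to_mb_per_s(gbps: float) -> float:
    """Convert gigabits per second to decimal megabytes per second."""
    return gbps * 1000 / 8


print(round(mib_to_mb(800), 1))        # 838.9
print(round(gbps_to_mb_per_s(14.25)))  # 1781
```

The gap is just under 5%, which is enough to distort a comparison between two providers if the units are mixed.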

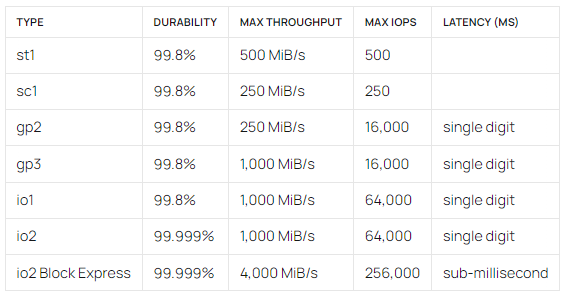

The EBS service offers a wide collection of volume types for a customer's workload, from spinning disks and general-purpose SSDs, to high-performance provisioned IOPS SSDs.

Storage, throughput and IOPS can be charged separately depending on the volume type; for example, gp3 storage costs $0.08/GB-month and io2 storage $0.125/GB-month. gp3 IOPS cost $0.005/IOPS-month, and io2 IOPS start at $0.065/IOPS-month.

A quick overview of the offer as of September 2023:

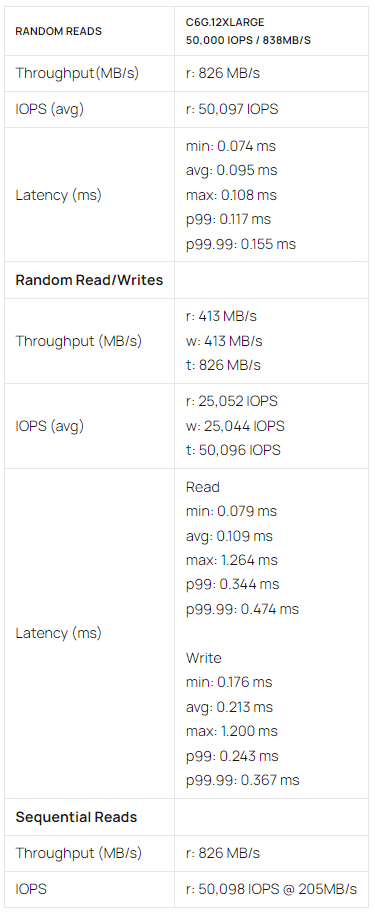

To meet our scenario of 50,000 IOPS, an io2 volume running on a c6g.12xlarge should work.

TOP TIP: https://instances.vantage.sh/ is a great place to discover all the details about specific instance types. Clicking on the API Name gives you information such as EBS burst and baseline capacity - a MUST HAVE tool if working with any IO workloads on AWS.

2048GiB | 50,000 IOPS | 800MiB/s (838 MB/s)

2,048 GiB x 0.125 USD = 256.00 USD

32,000 IOPS x 0.065 USD = 2,080.00 USD

18,000 IOPS x 0.0455 USD = 819.00 USD

Total tier cost: 2,080.00 USD + 819.00 USD = 2,899.00 USD

Total Volume Cost: $3,155.00/month
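The tiered calculation above generalises as follows. The storage rate and the first two IOPS tiers come from the breakdown in this article; the third tier rate (above 64,000 IOPS) is an assumption on my part, included only so the sketch also covers larger volumes. The function name is hypothetical.

```python
# (tier ceiling in IOPS, USD per IOPS-month). The first two rates appear in
# the article's breakdown; the third tier rate is an ASSUMPTION.
IOPS_TIERS = [
    (32_000, 0.065),
    (64_000, 0.0455),
    (float("inf"), 0.03185),
]
STORAGE_RATE = 0.125  # USD per GiB-month, as quoted in the article


def io2_monthly_cost(size_gib: int, iops: int) -> float:
    """Estimate the monthly io2 volume cost: storage plus tiered IOPS."""
    cost = size_gib * STORAGE_RATE
    floor = 0
    for ceiling, rate in IOPS_TIERS:
        if iops > floor:
            # Charge only the IOPS that fall inside this tier.
            cost += (min(iops, ceiling) - floor) * rate
        floor = ceiling
    return round(cost, 2)


print(io2_monthly_cost(2048, 50_000))  # 3155.0
```

With the assumed third tier, two 1TB volumes at 150,000 IOPS each come out at roughly $12,806/month, which matches the figure used in the conclusion.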

The cheapest instance size to support 50,000 IOPS block storage:

c6g.12xlarge | 48vCPU | 96GB | 14.25Gbps EBS | 50,000 IOPS

Instance Cost: $1,191 /month

Total Cost: $4,346/month

The test case uses the Ubuntu 22.04 AMI, with encrypted volumes.

There is no doubt that an io2 volume performs to an impressive standard. The consistency of the results stands out: throughput, total throughput and IOPS across all load types are capped at the same value, within a few units.

The latency results are equally impressive, with 99.99% of requests completed within 0.474ms, considerably more consistent than the OCI test cases and on average 3x faster (0.3 -> 0.1ms) than OCI's Ultra High Performance iSCSI multipath latency.

This is likely possible thanks to EBS's dedicated storage network, which provides non-blocking, storage-only connectivity for Nitro instance types. I have no doubt this scales to the impressive, consistent limits of io2 Block Express, provided you provision an instance large enough.

There is no denying that the AWS test results prove you get the volume you pay for, but you need a significant investment in AWS to push these numbers.

Comparison and Conclusion

We've proved that both cloud providers can deliver performance for provisioned volumes, with AWS being more consistent in tail-end latencies. However, let's take a look at the price differences between these cloud providers.

To summarise, we found the following:

- Overall, OCI block storage is considerably cheaper than any SSD option provided by AWS, and its performance delivers.

- The tail-end latencies of AWS io2 volumes are significantly better and more consistent than those of OCI's offerings.

- At 50,000 IOPS, an OCI setup (with a compatible instance) can cost $279.59/month. To get a similar block volume setup with AWS would cost $4,346/month.

- An OCI E4.Flex 24OCPU (48vCPU & 96GB RAM) instance costs $553.54/month and can max out the maximum performance OCI volumes offer (recorded at 2787MB/s & 303,394 IOPS).

- An AWS c6g.12xlarge with 48vCPU and 96GB RAM instance costs $1,191/month and is documented to have an EBS throughput limit of 1781MB/s and 50,000 IOPS.

To provide food for thought, an instance capable of pushing 2787MB/s (22.3Gbps) and 300,000 IOPS, such as the c6in.metal or m6in.metal, would cost around $6,504/month. io2 Block Express volumes have a maximum of 256,000 IOPS, so two volumes running at 150,000 IOPS and 1TB each (configured using RAID0) would cost $12,806/month, for a total cost of $19,310/month.

According to the documentation, such a setup should be able to outperform the throughput of an equivalent OCI volume and would most likely provide better tail latency, as we saw in the tests, but the IOPS would still be limited to 300,000. Our OCI test case delivered 300,000 IOPS at just $1,027.80/month, close to a 19x difference in cost!
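For reference, the cost multiples can be recomputed from the monthly totals quoted in this article. The snippet is plain arithmetic on those totals; the constant and function names are hypothetical.

```python
# Monthly totals (USD) quoted in this article: volume plus instance.
OCI_50K, AWS_50K = 279.59, 4346.00
OCI_300K, AWS_300K = 1027.80, 19310.00


def cost_ratio(aws: float, oci: float) -> float:
    """How many times more the AWS setup costs than the OCI one."""
    return round(aws / oci, 1)


print(cost_ratio(AWS_50K, OCI_50K))    # 15.5
print(cost_ratio(AWS_300K, OCI_300K))  # 18.8
```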

It may be argued that locally-attached instance ephemeral SSD would suit better here, which may be true in some cases. However, for workloads such as relational databases, a high-performance persistent disk is typically a hard requirement.

It is clear that OCI's offering is nothing to mock, especially given the price difference between the two cloud providers. If a 0.5ms p99 latency is acceptable for your workload (it probably is), then OCI can be a perfect choice to deliver a high-performance Block Volume for your cloud workload without looking to invest multiple thousands a month for a single instance.

Stay tuned - Subscribe for updates!

Test Commands

| Random Reads | |
|---|---|
| Throughput (MB/s) | sudo fio --filename=/dev/device --direct=1 --rw=randread --bs=256k --ioengine=libaio --iodepth=64 --runtime=120 --numjobs=4 --time_based --group_reporting --name=throughput-test-job --eta-newline=1 --readonly |
| IOPS (avg) | sudo fio --filename=/dev/device --direct=1 --rw=randread --bs=4k --ioengine=libaio --iodepth=256 --runtime=120 --numjobs=4 --time_based --group_reporting --name=iops-test-job --eta-newline=1 --readonly |
| Latency (ms) | sudo fio --filename=/dev/device --direct=1 --rw=randread --bs=4k --ioengine=libaio --iodepth=1 --numjobs=1 --time_based --group_reporting --name=readlatency-test-job --runtime=120 --eta-newline=1 --readonly |
| Random Read/Writes | |
| Throughput (MB/s) | sudo fio --filename=/dev/device --direct=1 --rw=randrw --bs=256k --ioengine=libaio --iodepth=64 --runtime=120 --numjobs=4 --time_based --group_reporting --name=throughput-test-job --eta-newline=1 |
| IOPS (avg) | sudo fio --filename=/dev/device --direct=1 --rw=randrw --bs=4k --ioengine=libaio --iodepth=256 --runtime=120 --numjobs=4 --time_based --group_reporting --name=iops-test-job --eta-newline=1 |
| Latency (ms) | sudo fio --filename=/dev/device --direct=1 --rw=randrw --bs=4k --ioengine=libaio --iodepth=1 --numjobs=1 --time_based --group_reporting --name=rwlatency-test-job --runtime=120 --eta-newline=1 |
| Sequential Reads | |
| Throughput (MB/s) | sudo fio --filename=/dev/device --direct=1 --rw=read --bs=256k --ioengine=libaio --iodepth=64 --runtime=120 --numjobs=4 --time_based --group_reporting --name=throughput-test-job --eta-newline=1 --readonly |
| IOPS | sudo fio --filename=/dev/device --direct=1 --rw=read --bs=4k --ioengine=libaio --iodepth=256 --runtime=120 --numjobs=4 --time_based --group_reporting --name=iops-test-job --eta-newline=1 --readonly |

| Random Reads | 8x OCPU - 20 VPU 50,000 IOPS / 680 MBps |
|---|---|
| Throughput (MB/s) | r: 560 MB/s |
| IOPS (avg) | r: 50,431 IOPS |
| Latency (ms) | min: 0.265 ms, avg: 0.376 ms, max: 9.092 ms, p99: 0.603 ms, p99.99: 1.991 ms |
| Random Read/Writes | |
| Throughput (MB/s) | r: 351 MB/s, w: 351 MB/s, t: 702 MB/s |
| IOPS (avg) | r: 24,443 IOPS, w: 24,438 IOPS, t: 48,881 IOPS |
| Latency (ms) | Read - min: 0.275 ms, avg: 0.355 ms, max: 14.095 ms, p99: 0.553 ms, p99.99: 1.680 ms; Write - min: 0.325 ms, avg: 0.431 ms, max: 9.970 ms, p99: 0.619 ms, p99.99: 2.245 ms |
| Sequential Reads | |
| Throughput (MB/s) | r: 549 MB/s |
| IOPS (avg) | r: 138,000 IOPS @ 563 MB/s |

| Random Reads | 24x OCPU - 120 VPU 300,000 IOPS / 2,680 MBps |
|---|---|
| Throughput (MB/s) | r: 2772 MB/s |
| IOPS (avg) | r: 303,684 IOPS |
| Latency (ms) | min: 0.200 ms, avg: 0.316 ms, max: 20.526 ms, p99: 0.529 ms, p99.99: 6.063 ms |
| Random Read/Writes | |
| Throughput (MB/s) | r: 1387 MB/s, w: 1390 MB/s, t: 2777 MB/s |
| IOPS (avg) | r: 151,725 IOPS, w: 151,669 IOPS, t: 303,394 IOPS |
| Latency (ms) | Read - min: 0.211 ms, avg: 0.323 ms, max: 20.533 ms, p99: 0.529 ms, p99.99: 6.783 ms; Write - min: 0.255 ms, avg: 0.381 ms, max: 18.310 ms, p99: 0.594 ms, p99.99: 8.455 ms |
| Sequential Reads | |
| Throughput (MB/s) | r: 2787 MB/s |
| IOPS | r: 304,013 IOPS @ 1245 MB/s |

| Type | Durability | Max Throughput | Max IOPS | Latency (ms) |
|---|---|---|---|---|
| st1 | 99.8% | 500 MiB/s | 500 | |
| sc1 | 99.8% | 250 MiB/s | 250 | |
| gp2 | 99.8% | 250 MiB/s | 16,000 | single digit |
| gp3 | 99.8% | 1,000 MiB/s | 16,000 | single digit |
| io1 | 99.8% | 1,000 MiB/s | 64,000 | single digit |
| io2 | 99.999% | 1,000 MiB/s | 64,000 | single digit |
| io2 Block Express | 99.999% | 4,000 MiB/s | 256,000 | sub-millisecond |

| Random Reads | c6g.12xlarge 50,000 IOPS / 838 MB/s |
|---|---|
| Throughput (MB/s) | r: 826 MB/s |
| IOPS (avg) | r: 50,097 IOPS |
| Latency (ms) | min: 0.074 ms, avg: 0.095 ms, max: 0.108 ms, p99: 0.117 ms, p99.99: 0.155 ms |
| Random Read/Writes | |
| Throughput (MB/s) | r: 413 MB/s, w: 413 MB/s, t: 826 MB/s |
| IOPS (avg) | r: 25,052 IOPS, w: 25,044 IOPS, t: 50,096 IOPS |
| Latency (ms) | Read - min: 0.079 ms, avg: 0.109 ms, max: 1.264 ms, p99: 0.344 ms, p99.99: 0.474 ms; Write - min: 0.176 ms, avg: 0.213 ms, max: 1.200 ms, p99: 0.243 ms, p99.99: 0.367 ms |
| Sequential Reads | |
| Throughput (MB/s) | r: 826 MB/s |
| IOPS | r: 50,098 IOPS @ 205 MB/s |

Edits

- 02 Oct 2023 - Added a reference for EBS Optimised dedicated network architecture.